Notes on Papers | AlphaEvolve: A Gemini-powered coding agent for designing advanced algorithms

The following are my notes on the AlphaEvolve paper by the AlphaEvolve team at Google.

Project Overview

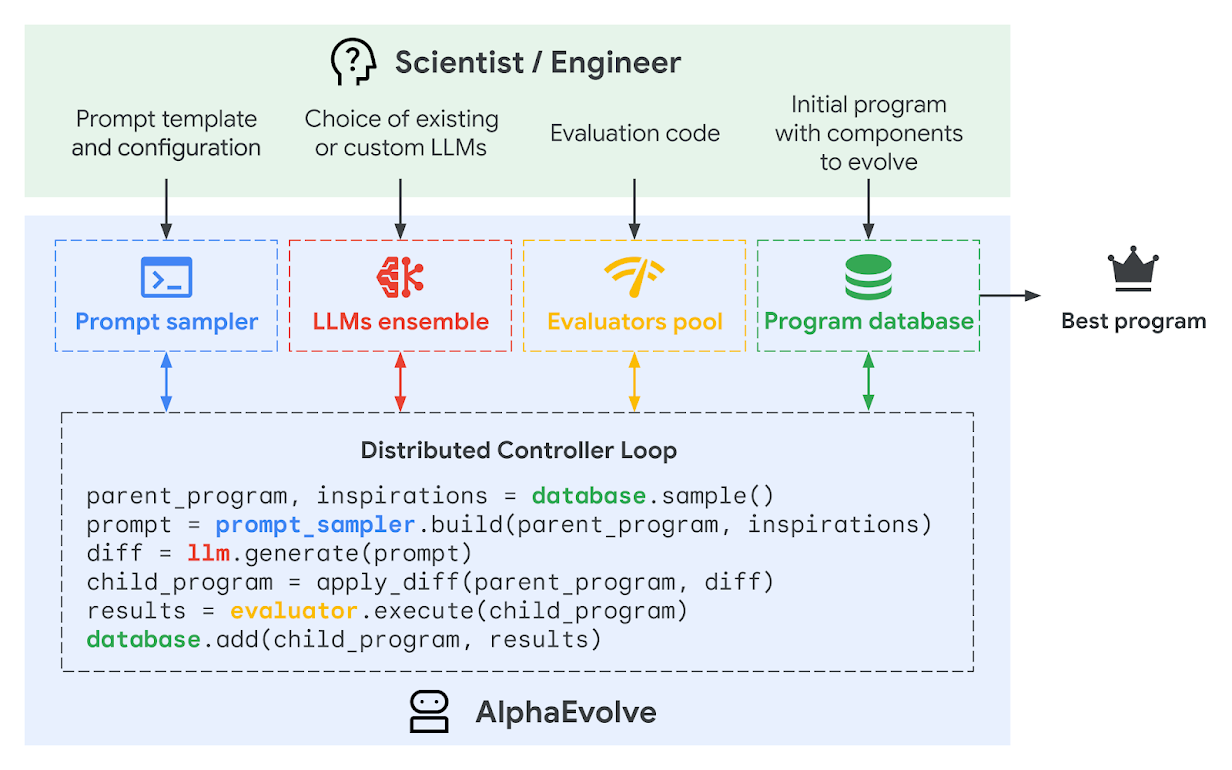

Diagram showing how the prompt sampler first assembles a prompt for the language models, which then generate new programs. These programs are evaluated by evaluators and stored in the programs database. This database implements an evolutionary algorithm that determines which programs will be used for future prompts.
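To make the loop in the diagram concrete, here is a minimal toy sketch of the same cycle: sample a prompt from the database, generate a new program, evaluate it, and store it. Everything in it is an assumption for illustration: the names `build_prompt`, `generate_program`, `evaluate`, and `evolve` are hypothetical, a "program" is just a list of three numbers, and the language model is replaced by random recombination plus a small perturbation. It is not AlphaEvolve's implementation.

```python
import random


def evaluate(program):
    """Hypothetical evaluator: score a candidate (higher is better)."""
    # Toy objective (an assumption): get close to a fixed target vector.
    target = [3.0, -1.0, 2.0]
    return -sum((p - t) ** 2 for p, t in zip(program, target))


def build_prompt(database, rng, num_parents=2):
    """Stand-in for the prompt sampler: pick parents among the best programs."""
    ranked = sorted(database, key=lambda entry: entry[0], reverse=True)
    top = ranked[: max(num_parents, len(ranked) // 4)]
    return [program for _, program in rng.sample(top, num_parents)]


def generate_program(prompt, rng):
    """Stand-in for the language model: recombine parents, then perturb one value."""
    child = [rng.choice(genes) for genes in zip(*prompt)]
    index = rng.randrange(len(child))
    child[index] += rng.gauss(0.0, 0.5)
    return child


def evolve(generations=200, population=20, seed=0):
    """Run the sample -> generate -> evaluate -> store loop; return (score, program)."""
    rng = random.Random(seed)
    database = []  # the "programs database": a list of (score, program) pairs
    for _ in range(population):
        program = [rng.uniform(-5, 5) for _ in range(3)]
        database.append((evaluate(program), program))

    for _ in range(generations):
        prompt = build_prompt(database, rng)
        child = generate_program(prompt, rng)
        database.append((evaluate(child), child))
        # Keep the database bounded by dropping the weakest entries.
        database.sort(key=lambda entry: entry[0], reverse=True)
        del database[population:]

    return max(database, key=lambda entry: entry[0])


if __name__ == "__main__":
    score, program = evolve()
    print(score, program)
```

Because the weakest entries are dropped after each step, the best score in this toy database never gets worse from one generation to the next. The sketch assumes `population` is at least 2 so that two parents can be sampled.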

AlphaEvolve Impact:

- Improving data center scheduling: AlphaEvolve discovered a simple yet remarkably effective heuristic to help Borg orchestrate Google's vast data centers more efficiently. This solution, now in production for over a year (article published in May 2025), continuously recovers, on average, 0.7% of Google’s worldwide compute resources.

- Assisting in hardware design: AlphaEvolve proposed a Verilog rewrite that removed unnecessary bits in a key, highly optimized arithmetic circuit for matrix multiplication. Crucially, the proposal must pass robust verification methods to confirm that the modified circuit maintains functional correctness.

- Enhancing AI training and inference: AlphaEvolve is accelerating AI performance and research velocity. By finding smarter ways to divide a large matrix multiplication operation into more manageable subproblems, it sped up this vital kernel in Gemini’s architecture by 23%, leading to a 1% reduction in Gemini's training time. Because developing generative AI models requires substantial computing resources, every efficiency gained translates to considerable savings.

AlphaEvolve achieved up to a 32.5% speedup for the FlashAttention kernel implementation in Transformer-based AI models.
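As a rough illustration of what "dividing a large matrix multiplication into more manageable subproblems" can mean, here is a minimal sketch of a tiled (blocked) matrix multiplication in plain Python. This is the generic textbook technique, not the heuristic AlphaEvolve found; the function names and the `tile` parameter are my own assumptions. In pure Python tiling brings no speedup. On real accelerators, the choice of tile sizes affects how well the work fits into fast memory, which is the kind of choice a search procedure could tune.

```python
def matmul_naive(a, b):
    """Reference product of an n x k matrix and a k x m matrix (lists of lists)."""
    n, k, m = len(a), len(b), len(b[0])
    return [
        [sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
        for i in range(n)
    ]


def matmul_tiled(a, b, tile=2):
    """Same product, computed one tile x tile block at a time."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0] * m for _ in range(n)]
    # The three outer loops walk over blocks (the subproblems).
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for p0 in range(0, k, tile):
                # The inner loops accumulate one block's contribution into c.
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = c[i][j]
                        for p in range(p0, min(p0 + tile, k)):
                            acc += a[i][p] * b[p][j]
                        c[i][j] = acc
    return c


if __name__ == "__main__":
    a = [[1, 2, 3], [4, 5, 6]]
    b = [[7, 8], [9, 10], [11, 12]]
    print(matmul_tiled(a, b, tile=2))
```

The `min(...)` bounds handle matrices whose dimensions are not multiples of the tile size, so any positive `tile` gives the same result as the naive version.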

My Notes: